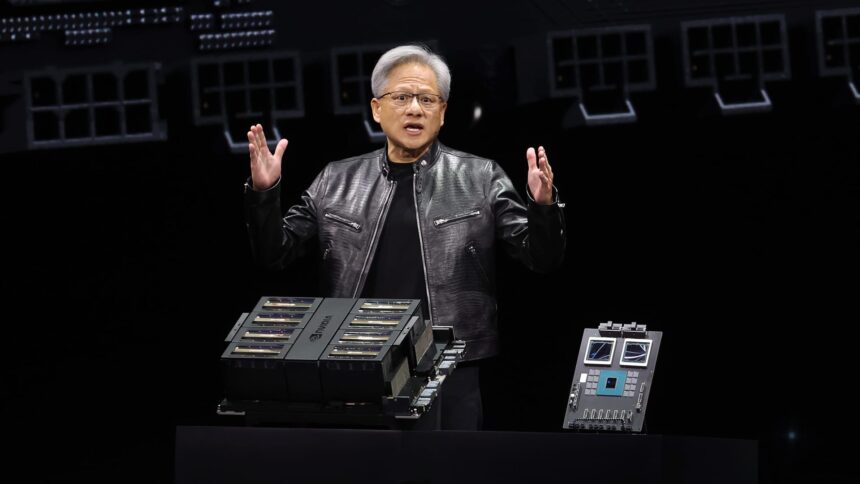

Nvidia CEO Jensen Huang delivers a keynote address during the Nvidia GTC Artificial Intelligence Conference at SAP Center on March 18, 2024 in San Jose, California.

Justin Sullivan | Getty Images

Nvidia on Monday announced a new generation of artificial intelligence chips and software for running artificial intelligence models. The announcement, made during Nvidia’s developer conference in San Jose, comes as the chipmaker seeks to solidify its position as the go-to supplier for AI companies.

Nvidia’s share price is up five-fold and total sales have more than tripled since OpenAI’s ChatGPT kicked off the AI boom in late 2022. Nvidia’s high-end server GPUs are essential for training and deploying large AI models. Companies like Microsoft and Meta have spent billions of dollars buying the chips.

The new generation of AI graphics processors is named Blackwell. The first Blackwell chip is called the GB200 and will ship later this year. Nvidia is enticing its customers with more powerful chips to spur new orders. Companies and software makers, for example, are still scrambling to get their hands on the current generation of “Hopper” H100s and similar chips.

“Hopper is fantastic, but we need bigger GPUs,” Nvidia CEO Jensen Huang said on Monday at the company’s developer conference in California.

Nvidia shares fell more than 1% in extended trading on Monday.

The company also introduced revenue-generating software called NIM that will make it easier to deploy AI, giving customers another reason to stick with Nvidia chips over a growing field of competitors.

Nvidia executives say that the company is becoming less of a mercenary chip provider and more of a platform provider, like Microsoft or Apple, on which other companies can build software.

“Blackwell’s not a chip, it’s the name of a platform,” Huang said.

“The sellable commercial product was the GPU and the software was all to help people use the GPU in different ways,” said Nvidia enterprise VP Manuvir Das in an interview. “Of course, we still do that. But what’s really changed is, we really have a commercial software business now.”

Das said Nvidia’s new software will make it easier to run programs on any of Nvidia’s GPUs, even older ones that might be better suited for deploying AI than for building it.

“If you’re a developer, you’ve got an interesting model you want people to adopt, if you put it in a NIM, we’ll make sure that it’s runnable on all our GPUs, so you reach a lot of people,” Das said.

Meet Blackwell, the successor to Hopper

Nvidia’s GB200 Grace Blackwell Superchip, with two B200 graphics processors and one Arm-based central processor.

Every two years Nvidia updates its GPU architecture, unlocking a big jump in performance. Many of the AI models released over the past year were trained on the company’s Hopper architecture, which was announced in 2022 and is used by chips such as the H100.

Nvidia says Blackwell-based processors, like the GB200, offer a huge performance upgrade for AI companies, with 20 petaflops in AI performance versus 4 petaflops for the H100. The additional processing power will enable AI companies to train bigger and more intricate models, Nvidia said.

The chip includes what Nvidia calls a “transformer engine” specifically built to run transformer-based AI, one of the core technologies underpinning ChatGPT.

The Blackwell GPU is large and combines two separately manufactured dies into one chip manufactured by TSMC. It will also be available as an entire server called the GB200 NVLink 2, combining 72 Blackwell GPUs and other Nvidia parts designed to train AI models.

Nvidia CEO Jensen Huang compares the size of the new “Blackwell” chip versus the current “Hopper” H100 chip at the company’s developer conference, in San Jose, California.

Nvidia

Amazon, Google, Microsoft, and Oracle will sell access to the GB200 through cloud services. The GB200 pairs two B200 Blackwell GPUs with one Arm-based Grace CPU. Nvidia said Amazon Web Services would build a server cluster with 20,000 GB200 chips.

Nvidia said that the system can deploy a 27-trillion-parameter model. That’s much larger than even the biggest models, such as GPT-4, which reportedly has 1.7 trillion parameters. Many artificial intelligence researchers believe bigger models with more parameters and data could unlock new capabilities.
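To put those parameter counts in perspective, the short Python sketch below computes the ratio between the two figures quoted above and a rough size for the model weights alone. The two bytes per parameter is an illustrative assumption (16-bit weights), not a figure from Nvidia, and the function names are hypothetical.

```python
# Figures quoted in the article.
SYSTEM_MAX_PARAMS = 27 * 10**12       # 27-trillion-parameter model
GPT4_REPORTED_PARAMS = 17 * 10**11    # reportedly 1.7 trillion parameters


def size_ratio(larger: int, smaller: int) -> float:
    """Return how many times bigger one parameter count is than another."""
    return larger / smaller


def weight_terabytes(param_count: int, bytes_per_param: int = 2) -> float:
    """Estimate storage for the weights alone, in decimal terabytes.

    bytes_per_param=2 is an assumption (16-bit weights); real deployments
    may use other precisions and need extra memory beyond the weights.
    """
    return param_count * bytes_per_param / 10**12


ratio = size_ratio(SYSTEM_MAX_PARAMS, GPT4_REPORTED_PARAMS)
print(f"About {ratio:.1f}x the reported size of GPT-4")
print(f"Roughly {weight_terabytes(SYSTEM_MAX_PARAMS):.0f} TB of weights at 2 bytes each")
```

Under that assumption the weights alone would be far too large for a single GPU, which is consistent with the article's description of a server that combines dozens of them.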

Nvidia didn’t provide a cost for the new GB200 or the systems it’s used in. Nvidia’s Hopper-based H100 costs between $25,000 and $40,000 per chip, with whole systems that cost as much as $200,000, according to analyst estimates.

Nvidia will also sell B200 graphics processors as part of a complete system that takes up an entire server rack.

Nvidia inference microservice

Nvidia also announced it’s adding a new product named NIM, which stands for Nvidia Inference Microservice, to its Nvidia enterprise software subscription.

NIM makes it easier to use older Nvidia GPUs for inference, or the process of running AI software, and will allow companies to continue to use the hundreds of millions of Nvidia GPUs they already own. Inference requires less computational power than the initial training of a new AI model. NIM is aimed at companies that want to run their own AI models, instead of buying access to AI results as a service from companies like OpenAI.

The strategy is to get customers who buy Nvidia-based servers to sign up for Nvidia enterprise, which costs $4,500 per GPU per year for a license.
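As a rough illustration of what that per-GPU pricing means for a buyer, the Python sketch below multiplies the quoted license price by a GPU count. The eight-GPU server is a hypothetical example, and the calculation ignores any discounts or bundles, which the article does not describe.

```python
LICENSE_PRICE_PER_GPU_PER_YEAR = 4_500  # USD, the figure quoted in the article


def annual_license_cost(gpu_count: int,
                        price_per_gpu: int = LICENSE_PRICE_PER_GPU_PER_YEAR) -> int:
    """Return the yearly license cost in USD for a given number of GPUs."""
    if gpu_count < 0:
        raise ValueError("gpu_count must be non-negative")
    return gpu_count * price_per_gpu


# Hypothetical example: a single server with eight GPUs.
print(annual_license_cost(8))  # 36000
```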

Nvidia will work with AI companies like Microsoft or Hugging Face to ensure their AI models are tuned to run on all compatible Nvidia chips. Then, using a NIM, developers can efficiently run the model on their own servers or cloud-based Nvidia servers without a lengthy configuration process.

“In my code, where I was calling into OpenAI, I will replace one line of code to point it to this NIM that I got from Nvidia instead,” Das said.
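The one-line change Das describes could look something like the Python sketch below. It assumes the NIM accepts the same chat-completions request shape as OpenAI's API, which the article implies but does not spell out. The NIM address, the model name, and the helper function are hypothetical, and the request is only built, never sent.

```python
import json
import urllib.request

OPENAI_BASE_URL = "https://api.openai.com/v1"
NIM_BASE_URL = "http://localhost:8000/v1"  # hypothetical address of a self-hosted NIM

# The "one line" that changes: which service the application talks to.
BASE_URL = NIM_BASE_URL  # previously: BASE_URL = OPENAI_BASE_URL


def build_chat_request(base_url: str, model: str, prompt: str,
                       api_key: str = "") -> urllib.request.Request:
    """Build (but do not send) a chat-completions style POST request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


# "example-model" is a placeholder, not a real model identifier.
request = build_chat_request(BASE_URL, "example-model", "Hello")
print(request.full_url)
```

Keeping the base URL in a single constant means the rest of the application code stays the same whichever service it points to.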

Nvidia says the software will also help AI run on GPU-equipped laptops, instead of on servers in the cloud.